A Generic Superoptimizer

04 Mar 2016

.

posts .

Comments

#Superoptimizer

So I tried to publish this as a journal article, but got tired of the submission process. So here it is! Let me know if you find it interesting!

Developing and evaluating a machine-independent, multi-objective superoptimizer

This article describes the design, development and usage of the generic, multi-objective superoptimizer. This superoptimizer generates optimal programs for any programming language that can be expressed by a context-free grammar for any objective such as finding the smallest, fastest or most energy efficient program. The superoptimizer was developed in Java using the ANTLR language analysis framework. It uses manual pruning and equivalence testing by unit tests to identify programs for further performance and non-functional analysis.

This article also presents a case study with the Lego NXT Mindstorms robot using the LeJOS Java-based firmware. This case study involved analysing a simple “line following program” that failed to perform its intended tasks. The superoptimizer searched for optimal programs by performance, binary file size and program length. The superoptimizer discovered a faster and smaller program that achieved the original program’s task.

1. Introduction

Superoptimization is the process by which an optimal program is discovered by searching the space of all programs that can be expressed in a programming language’s grammar. A machine-independent superoptimizer called the “generic, multi-objective superoptimizer” was developed to explore the potential applications of superoptimization in contemporary software development: in particular for embedded software development where optimal programs may not necessarily be the fastest or smallest programs.

Section 2 of this article establishes the context for superoptimization and prior research into this area. Section 3 describes the research methodology. Section 4 describes the development of the superoptimizer and core processes involved in the superoptimization process. Section 5 describes a case study, involving optimizing a program on the Lego NXT Mindstorms robot running the LeJOS Java firmware is described. Section 6 discusses the learning obtained from the superoptimizer development and case study. Section 7 proposes further areas for research and section 8 concludes the paper.

2. Literature review

Introduction

The term superoptimizer was originally defined by Massalin as a process that “finds the shortest program to compute a function” [Massalin 1987, p. 122]. In Massalin’s paper, this process involved searching the set of all programs defined by a particular processor’s instruction set. According to Massalin the input to a superoptimizer is a program that defines the program’s required functionality and the output of the superoptimizer is another program that not only has the required functionality but also is shorter in length. However Joshi et al suggest that a suite of metrics be used to identify optimal programs as “on [the Motorola 68000 series instruction set], the shortest would also be the fastest, but on multiple-issue architectures this need not be so.” [Joshi et al. 2002, p. 2]

Superoptimizer Limitations

Superoptimizers have not revolutionised software development despite its inherent advantages since its inception in the 1980s for the following reasons:

— Timeliness of results: according to Massalin generating a simple program with 12 instructions takes several hours [Massalin 1987, p. 123];

— Superoptimizing programs with pointers is extremely time consuming as “pointers can point anywhere in memory and so to model a pointer in terms of Boolean expressions one needs to take all of memory into account.” [Massalin 1987, p. 123];

— A machine-independent version of a superoptimizer is limited to very short programs [Massalin 1987, p. 123];

— Joshi et al identified a risk that code generated by a superoptimizer might be functionally correct by passing a series of tests but incorrect by design and risk executing unsafe operations [Joshi et al. 2002, p. 2].

— Superoptimizers are often developed to support compiler development: an esoteric field in its own right.

These limitations have prevented mainstream adoption of superoptimizers in contemporary software development.

Past Superoptimizers

Massalin’s superoptimizer for the Motorola 68020’s instruction set is considered to be the first superoptimizer specifically created for superoptimizing software applications. However, Massalin quotes several authors such as Krumme and Ackley’s “code generator for the DEC-10 computer … based on exhaustive search.” [Massalin 1987, p. 124] and Kessler’s code optimiser translating instruction sequences into one instruction through a template matching process. There have been many superoptimizers written since Massalin’s paper on superoptimizers, including Hume’s review of superoptimizers [Hume 2011], ordered chronologically:

— Granlund and Kenner’s GNU Superoptimizer for “the IBM RS/6000,the Sparc, the Motorola 68k and 88k, the AMD 29k and the Intel 80386”, written in C [Granlund & Kenner 1992, p. 347].

— Joshi et al’s ‘Denali-1’ goal-oriented superoptimizer for the Alpha EV6 processor written in C and Java [Joshi et al. 2002, p. 2]. Joshi et al’s ‘Denali-2’ paper presents a design of a more flexible goal-oriented superoptimizer: using for the Alpha EV6 and potentially for then “upcoming implementations of IA-64” [Joshi et al. 2006, p. 968].

— Basnal and Aiken use superoptimizer techniques for generating peephole optimizers for processors with an x86 architecture [Bansal & Aiken 2006, p. 395], written in C++ and O’Caml [Bansal & Aiken 2006, p. 399]. Their subsequent work involved a prototype binary translator from PowerPC to x86 [Bansal & Aiken 2008].

— Brain et al’s Total Optimisation using Answer Set Technology (TOAST) superoptimizer for the MIPS, SPARC-V7 and SPARC-V8 architectures [Brain et al. 2006, p. 275].

— Sayle used a superoptimizer to optimise multi-way, “switch” statements for GCC on Linux and Debian operating systems with x86 and IA-64 architectures [Sayle 2008].

— The author developed a generic, multi-objective distributed superoptimizer but it was never released to the public and has not been used since its original development [Joseph 2009]. The superoptimizer supported any programming language that could be expressed by a context-free grammar but its implementation was limited to a depth of three statements.

— Serpell wrote a Microchip PIC Microcontroller superoptimizer [Serpell 2009] written in C.

— The “Hacker’s Assistant” for RISC computers with an assembly-like programming language [Warren 2009] written in C.

— Garg and Kumar developed a superoptimizer for the low-level virtual machine (LLVM) project’s implementation of the x86 architecture processors [Garg et al. 2012]. Juház and Kozsik have also integrated superoptimizer techniques into the LLVM project [Juhász & Kozsik 2012], and there are other attempts to integrate superoptimizer techniques into the LLVM [Auler 2011; Regehr 2014].

— Schkufza et al developed the STOKE superoptimizer for x86-64 assembly code which uses a “Markov Chain Monte Carlo sampler to rapidly explore the set of all possible programs, sacrificing search completeness for superoptimizer performance” [Schkufza et al. 2013, p. 2].

— Hume has recently investigated the potential for superoptimizing programs executed by a virtual machine: specifically, Java bytecode executed by a Java Virtual Machine [Hume 2013, p. 10]. Hume’s superoptimizer was implemented in Clojure [Hume 2013, p. 14].

3. Research Methodology

Research Aims

This project aims to reinvigorate interest in superoptimizing for embedded software development through designing and developing a machine-independent superoptimizer that optimizes code against multiple measures, called the generic, multi-objective superoptimizer.

The potential utility of a generic, distributed simulator will be assessed through a case study, optimising a program on the Lego NXT Mindstorms robot running the LeJOS Java firmware.

Research Stages

This research project was executed by the author between 4th quarter 2014 and 2nd quarter 2015, divided into the following stages:

- Design and develop a generic, multi-objective superoptimizer based on previous research by combining the following technologies:

- modern distributed computational technology such as Java’s Collections framework, Apache Spark or Cloudera/Hortonworks pre-configured Apache Hadoop instances,

- compiler and parser toolsets such as ANTLR to facilitate the description and analysis of a programming language’s grammar, and

- cloud-based, infrastructure-as-a-service providers.

- Conduct a case study with the generic, multi-objective superoptimizer: The superoptimizer will be used to superoptimize a path-following program in the Lego NXT Mindstorms robot in its vehicular configuration with the LeJOS 0.0.7 firmware.

- Analyse the results of the LeJOS Lego NXT Mindstorms case study, and discuss potential future applications of the generic, multi-objective superoptimizer.

4. Superoptimizer Development

Superoptimizer Features

It will have the following high-level features to counter the limitations identified in section 2.2:

— Distributing the superoptimizer’s tasks across multiple processors and computers would directly proportionately improve the timeliness of results, using contemporary, “Big Data” technologies for distributing tasks such as Apache Hadoop, Apache Spark and Java’s parallelization framework.

— Making the superoptimizer machine-independent by design gives potential end-users flexibility in designing their superoptimizer activities. The end-user can search a programming language’s entire grammar for the best program expressible by their desired metric. Alternatively, the end user can search a subset of the programming language’s grammar: sacrificing completeness for timeliness.

— Massalin’s issue of program length with machine-independent superoptimizers will be investigated through a case study.

— The generic, multi-objective superoptimizer generates performance and non-functional metrics on programs that meet the functional requirements, allowing end users to optimize programs with respect to a variety of metrics beyond program length or performance.

Following Hume’s definition of pruning and equivalence testing methods [Hume 2011, p. 4], this generic superoptimizer would involve:

— Manual pruning. Specifically, this involves the operator defines the space of programs to test by manually defining the grammar of programs to be tested: an operator seeking mathematical completeness would define the entire programming language’s grammar or an operator can reduce the program space by restricting the grammar used to generate programs.

— Equivalence testing by testing all programs with known inputs and expected outputs. Programs that generate the expected output would be then measured by the desired metric (such as energy used to execute a program if a program is optimised for energy consumption). This approach will be adopted due to its simplicity, relative ease of implementation and parallels with existing unit testing concepts.

Superoptimizer Concepts

The generic, multi-objective superoptimizer introduces the following concepts, based on the author’s previous research [Joseph 2009]:

— A test is a unit test-inspired, functional assessment of a program. With respect to Massalin’s analysis of a superoptimizer’s internals, it is a contemporary interpretation of Massalin’s probabilistic test, where the superoptimizer “run[s] the machine code for the program being tested a few times with some set of inputs and check whether the outputs match those of the source program” [Massalin 1987, p. 123].

— A scenario is a performance or non-functional metric of a program. It is inspired by the author’s previous research into register machines where the author searched for programs according to non-functional metrics, namely nontrivial behaviour as expressed through complexity and randomness [Joseph 2013, pp. 106-107, 134]. For example, these metrics (or cost functions as defined by Hume) could be program length (expressed as lines of code), execution time, or energy efficiency [Hume 2013, p. 52; Joseph 2009].

Superoptimizer Inputs

The generic, multi-objective superoptimizer requires the following inputs:

— Superoptimizer settings, including:

— A maximum duration for a test or scenario to run for a particular input program.

— The number of instances assigned for distributing tests or scenarios. The number of hardware devices used in the superoptimizer experiments or maximum number of processors on the machine running the superoptimizer determines the number of instances.

— A starting rule which the superoptimizer uses as an initial point for generating programs.

— An ANTLR grammar file that defines the structure of a programming language. However, unlike standard ANTLR grammar files, the input grammar file also contains a list of variables used to build programs.

— A configuration for a test and scenario. These configurations consist of:

— A Freemarker template, which allows generated programs to be inserted into code used by tests or scenarios.

— A Windows batch script or Linux shell script that performs the test or scenario. Tests return either a “true” or “false” if the generated program’s output matches the source program, with optional supporting results such as the number of failures encountered. Scenarios return a numerical or string value based on the particular metric chosen by the end-user.

— Other supporting files such as libraries required for compiling tests or scenario.

These inputs are reflected in the configuration file used for the superoptimizer[1].

Superoptimizer Process

Grammar File Parsing

The ANTLR grammar file is parsed by the antlrv4parser package in the generic, multi-objective superoptimizer. ANTLR was chosen as both the tool for parsing input grammar files and for expressing programming languages due to the project’s maturity, the high prevalence of ANTLR grammar files for various programming languages[2] and the similarity of the ANTLR parser and lexer files to Extended Backus Naur Form. The antlrv4parser package is a compiled lexer and parser of the ANTLR version 4-grammar lexer and parser files[3]. Currently, the superoptimizer supports simple ANTLR version 4 parser constructs.

— Parser Rules,

— References to Parser Rules,

— Terminal (specifically, constants in a parser rule), and

— Lists of alternative terminals or references to parser rules.

Program Building

The parsed ANTLR grammar file is used as input to the ProgramBuilder package, which is responsible for building all possible programs expressible in the grammar file.

Let us consider a simplistic ANTLR version 4 grammar. This grammar defines an addition and subtraction statements that can read and write to two variables: alpha and bravo. The following code listing expresses the grammar in the ANTLR version 4 syntax.

Code Listing 1. ANTLR Grammar File Example

grammar example\_grammar;

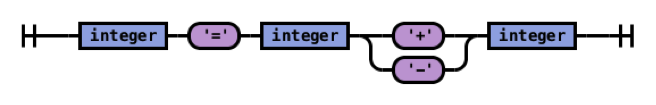

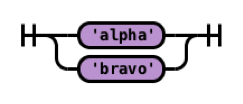

statement : integer ‘=’ integer ( ‘+’ | ‘-‘ ) integer;

integer : 'alpha' | 'bravo'

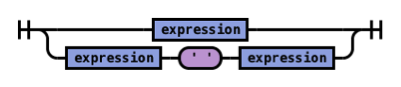

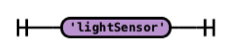

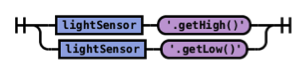

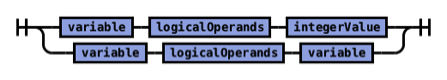

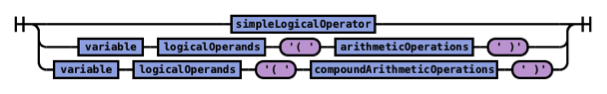

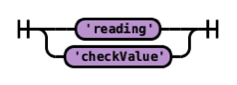

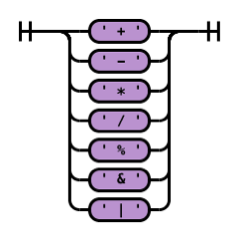

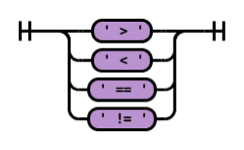

```This example grammar uses all ANTLR version 4-parser constructs the generic, multi-objective superoptimizer supports. The following images express the grammar as a syntax (or railroad) diagram[\[4\]](#_ftn4).

Statement

Integer

Fig. 1. Example grammar expressed in a syntax diagram

The superoptimizer generates the Cartesian product of potential integer values, substituting these values into the respective “integer” rule references. The following table shows all 16 possible programs generated by the superoptimizer that could be expressed by the example grammar.

Table I. Programs generated by the superoptimizer using the example grammar

alpha=alpha+alpha

alpha=bravo+alpha

bravo=alpha+alpha

bravo=bravo+alpha

alpha=alpha+bravo

alpha=bravo+bravo

bravo=alpha+bravo

bravo=bravo+bravo

alpha=alpha-alpha

alpha=bravo-alpha

bravo=alpha-alpha

bravo=bravo-alpha

alpha=alpha-bravo

alpha=bravo-bravo

bravo=alpha-bravo

bravo=bravo-bravo

### Test Execution

The generic, multi-objective superoptimizer sends the programs generated by the _ProgramBuilder_ package to the _TestRunner_ package. The _TestRunner_ package splits the set of generated programs across the instances defined by the end-user. On a per-program basis, the TestRunner package inserts a program into a FreeMarker template and executes an external process. An exemplar use-case involves the populated FreeMarker template becoming compliable or interpretable source code being compiled, interpreted and run by the external process (typically a script).

The external process needs to return either “true” or “false” to the superoptimizer, indicating whether the generated program’s output matched the source program’s output. Optional text, such as the number of test failures encountered can be appended to the output for further analysis. Successful programs: that is programs that return “true” are returned to the superoptimizer.

### Scenario Execution

The _ScenarioRunner_ package performs the non-functional analysis of successful programs. The scenario execution works in an identical way to the _TestRunner_ package with the exception that the _ScenarioRunner_ package can receive any text output from an external process. For example, in the case study the scenario calculates the program size and average program execution time, returning this data as two integers separated by a colon. This design facilitates the multi-objective optimization aspect of the superoptimizer as the _ScenarioRunner_ allows multiple metrics to be calculated on generated programs that pass the tests executed by the _TestRunner_.

### Output

The generic, multi-objective superoptimizer generates the following output:

— A list of all programs generated by the ProgramBuilder package.

— The results from the tests performed on all generated programs.

— The results from the scenarios performed on all generated programs that passed the previous tests.

— A system log generated by the log4j package.

Similarities and differences with past superoptimizers

------------------------------------------------------

Granlund and Kenner’s GNU Superoptimizer is well cited and regarded \[Bansal & Aiken 2006, p. 402; Crick et al. 2006, p. 272; Joshi et al. 2002, p. 2; Sayle 2008, p. 110\] especially for its contributions to the GNU C compiler, following Massalin’s definition of optimal programs having the shortest sequence \[Granlund & Kenner 1992, p. 345\] However, the generic, multi-objective superoptimizer provides a framework for alternate metrics for measuring code beyond code length or performance.

Joshi et al’s superoptimizer source programs are expressed in C language \[Joshi et al. 2002, p. 5\], and their Denali-2 uses a proprietary language \[Joshi et al. 2006, pp. 970-976\] to generate optimal code that “minimal number of instructions”, and postulate an alternate metric of “ number of extra registers” \[Joshi et al. 2006, p. 969\]. Brain et al’s TOAST tool follows a similar approach to the Denali approach \[Brain et al. 2006, p. 273\], only assessing program performance.

Bansal and Aiken focus on developing peephole superoptimizers specifically for the x86 architecture processors \[Bansal & Aiken 2006, p. 395\], whereas the generic, multi-objective superoptimizer can be used for superoptimizing any programming language that can be expressed with a context-free grammar.

Unlike Schkufza et al’s use of “a Markov Chain Monte Carlo sampler” to improve superoptimizer performance, the generic, multi-objective superoptimizer allows a developer to improve performance by excluding possible operations from the space of all possible programs. However the generic, multi-objective superoptimizer capitalizes on Schkufza et al’s observations by excluding possible operations sacrifices completeness for superoptimizer performance.

Sayle takes a unique approach to optimizing multiway selection code through known constructs and superoptimization: using a Paerto frontier for assessing multiway selection code for performance and code size \[Sayle 2008, p. 111\]. The generic, multi-objective superoptimizer attempts to allow any metric to be considered for measuring non-functional attributes of generated programs, which builds on Sayle’s definition of an objective function “that is used to assess the merit of each \[program\]” \[Sayle 2008, p. 110\]: optimizing code size involves assessing program size in bytes, optimizing performance involves measuring the amount of time required to execute the program.

Development Process

-------------------

The generic, multi-objective superoptimizer was developed on Mac OSX Yosemite and Windows 8.1 from 29 November 2014 to 23 February 2015. The history of the software development process is available on the project’s GitHub page[\[5\]](#_ftn5).

5. Case Study

===============

Introduction

------------

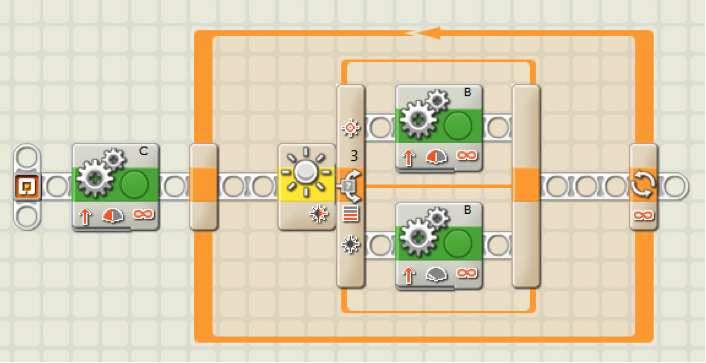

The following case study involves using the generic, multi-objective superoptimizer to optimize a program written for the Lego NXT Mindstorms robot. The author configured the Lego NXT Mindstorms robot in its vehicular, “Tribot” configuration \[Lego 2006\] with the Lego-provided firmware and installed a sample program.

Fig. 2. Tribot Program Source Code.

The sample program in Figure 2 drives the robot around an oval racing track on the Mindstorms Test Pad 8527, turning upon detecting a black line. The robot completes the manoeuvre at slower speeds, but if the robot’s speed exceeds 66% of its maximum speed the robot fails to complete its manoeuvre and leaves the racetrack. The author rewrote the program for in Java for the LeJOS 0.0.7 firmware (an extract is shown in Code Listing 2) and the Lego NXT Mindstorms robot exhibited the same behaviour[\[6\]](#_ftn6), as shown in the series of still video images in Figure 3. This issue does not appear in subsequent versions of the LeJOS firmware.

Code Listing 2. Extract of the Tribot program rewritten for the Java-based LeJOS framework

```

while ( !Button.LEFT.isPressed() ) {

reading = lightSensor.readNormalizedValue();

displayMainInformation( appName, lightSensor, reading, checkValue );

// superoptimised function start

if ( reading > ((lightSensor.getHigh() + lightSensor.getLow()) / 2 )) {

Motor.B.forward();

Motor.C.forward();

} else {

Motor.B.forward();

Motor.C.stop();

}

// superoptimized function stop

}

```Fig. 3. Lego NXT Mindstorms Robot failing to follow a line at 66% maximum speed.

\[gallery ids="260,262,257,259,258,261,264,263" type="rectangular"\]

A video of this demonstration is available on the author’s Vimeo channel[\[7\]](#_ftn7).

This implies that there are inefficiencies in the Lego-provided firmware and the LeJOS 0.0.7 firmware resulting in the robot being unable to complete the “line following routine” and complete the turn if its speed exceeds 66% of the maximum speed.

This case study will provide a practical demonstration of the use of the generic, multi-objective superoptimizer in optimizing the “line following program” through a laboratory experiment.

Experiment Plan

---------------

The aim of the case study is to test the following hypothesis:

Hypothesis 1. There exists a program, expressed in the Java-based LeJOS framework that performs the “line following program” faster than the existing program.

### Equipment Used

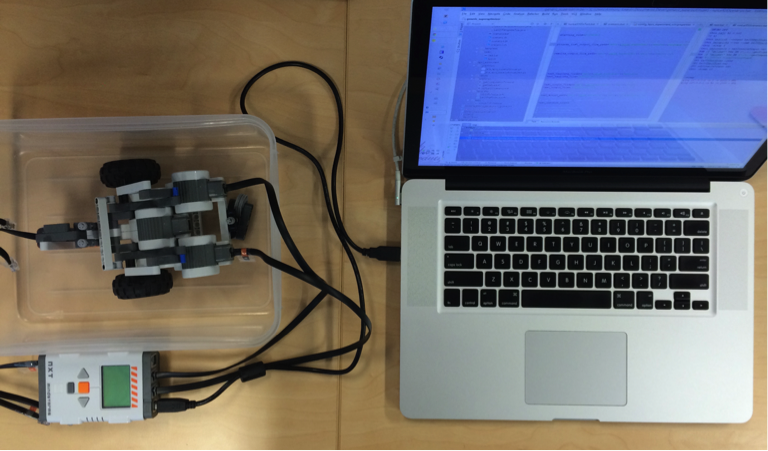

— The Lego NXT Mindstorms robot in its “tribot” configuration with non-rechargeable alkaline batteries.

— A MacBook Pro (15-inch, Mid 2012 edition) 2.6 GHz Intel Core i7 processor with 16GB memory running Windows 8.1 (64-bit) and Java (both 32-bit and 64-bit editions) with the latest service packs and updates installed. The MacBook Pro requires the LeJOS 0.0.7 framework and Lego NXT Mindstorms 64-bit drivers to be installed[\[8\]](#_ftn8).

— The generic, multi-objective superoptimizer installed on the MacBook Pro[\[9\]](#_ftn9).

— A USB 2.0 A-to-B cable connecting the Lego NXT Mindstorms robot to the MacBook Pro.

— An air-conditioned office environment.

The configuration of the experiment environment is shown below.

Fig. 4. Experiment configuration and environment.

### Method

The experiment would validate the hypothesis by conducting a test and multiple scenarios with the generic, multi-objective superoptimizer. The tests would assess whether programs generated by the grammar in Appendix A pass a series of unit tests executed by the Lego NXT Mindstorms robot running the LeJOS 0.0.7 firmware.

The scenarios would involve measuring the following attributes of valid programs:

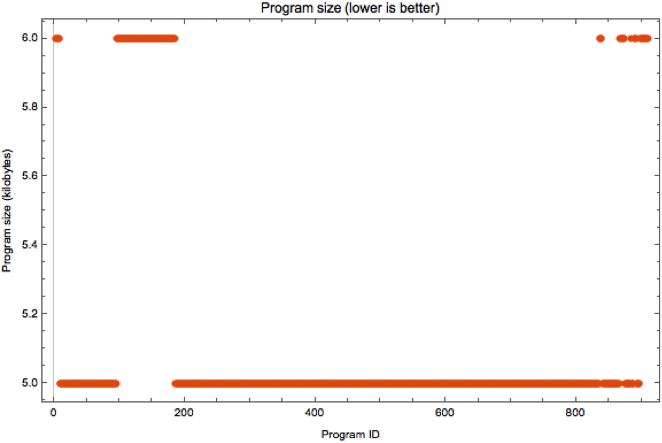

— The program’s average computation time in milliseconds from a 1000 samples,

— The compiled, binary file size of the compiled program (specifically, the “.nxj” object code that is deployed on the Lego NXT Mindstorms robot: not the “.class” file created by the Java compiler), and

— The program length, expressed in lines of code. This measure considers “if-then”, “if-then-else”, “do-while”, “while-do” and unary “?” constructs as well as any statement that ends with a semicolon as a line of code.

The optimal program would be the program with (in the following order):

— The shortest average computation time,

— The smallest compiled binary file size, and

— The shortest program length.

The previously mentioned GitHub release contains the test and scenario scripts as well as other relevant templates and scripts.

### Assumptions

Independence between programs executing is assumed, specifically that the following factors do not impact on the results obtained such as wear-and-tear and changes in battery life due to the continuous use of the sensors and motors. During the experiment battery life did not reduce below 50%, there was no physical damage observed on the robot or accessories and there was no consistent trend observed in measurements (such as a continually increasing computation time due to a lack of power), therefore these factors did not have an impact on the results obtained.

It is also assumed that any other optimization processes (such as peephole optimization) performed by the compiler are consistently applied during the compilation process. This assumption means that the independent variable (the set of programs generated by the superoptimizer based on the grammar provided) is the only factor being changed, making the dependent variables (average computation time, compiled binary file size and program length) altered by the independent variable.

Given that the grammar defined in Appendix A is not the complete Java grammar including the LeJOS framework, a globally optimal solution may not be obtained from this experiment. This assumption is valid as the objective of the experiment is not to find the optimal program but to find a program that solves the technical issue.

Finally, it is assumed that the following issues did not affect the quality of the results obtained.

Issues Encountered

------------------

### Lego NXT Mindstorms Robot Test and Scenario Execution Time

Executing tests and scenarios on the Lego NXT Mindstorms robot involved the following process, as implemented in the respective scripts:

— The generated program is substituted into the FreeMarker template.

— The LeJOS compiler (nxjc.bat) is called, creating a Java “.class” file.

— The LeJOS linker (nxj.bat) is called, creating a “.nxj” compiled binary file.

— The LeJOS uploading program (nxjupload.bat) is called, uploading the “.nxj” file onto the Lego NXT Mindstorms robot and running the file.

— The LeJOS remote console (nxjconsole.bat) is called, opening a USB-based connection to the Lego NXT Mindstorms allowing for data (such as the number of failures encountered during the tests or average computation time) to be transferred from the robot to the computer.

This process took approximately 5 seconds due to a delay being required for the Lego NXT Mindstorms to install the downloaded binary file, start the test or scenario application and initiate a “server” for the computer to connect to. If the LeJOS remote console tried to connect to the Lego NXT Mindstorms robot before the server was ready the connection fails and a “false positive” error occurs.

However, the superoptimizer generated 21,440,680 programs[\[10\]](#_ftn10) requiring up to 41 months to complete.

Therefore, the author developed a simple LeJOS simulator[\[11\]](#_ftn11): this simulator used the lejos.\* packages in the LeJOS 0.0.7 build but removed all functions that communicated to the Lego NXT Mindstorms robot. This simulator did not offer any emulation of the robot or its environment but did provide a functionally equivalent environment to run tests against. While tests required approximately 1.5 seconds, a software-only simulator meant that the task could be parallelized between the eight available logical cores, reducing the time to conduct the tests to 46 days.

The author deviated from the original experiment by introducing a two-step equivalence test: generated programs would be tested on the simulator and programs that pass the tests run in the simulator would be tested on the Lego NXT Mindstorms robot. This allowed the author to capitalize on the performance of the testing but eliminate the potential for Type 2 error (that is, a program passes the tests on the simulator but fails to pass tests on the Lego NXT Mindstorms robot). Type 1 error (that is, a program that would pass tests on the Lego NXT Mindstorms but does not pass the tests on the simulator) was considered acceptable, as a mathematically optimal solution was not expected.

### Infinite Loop Detection Failure

A defect was detected on 17 March 2015, where in the generic, multi-objective superoptimizer, which prevented detecting infinite loops in generated programs. For example, programs such as the following program continue indefinitely and the Apache Commons Exec framework’s watchdog timer would never halt the test.

Code Listing 3. Example program that failed to halt due to an infinite loop

```

while ( reading != lightSensor.getHigh() ) { lightSensor.setFloodlight( false ); }

```This issue was resolved by the following two fixes:

— Programs that followed the halted programs and contained “while” or “do- while” loops were skipped.

— A new feature allowing the generic, multi-objective superoptimizer to restart the superoptimizer test or scenario at a particular program (on a per-process basis if parallelization was enabled) was added.

The experiment was restarted on 4 April 2015 with the new version of the superoptimizer from program number 1,181,392 of 1,340,042 on a per-logical process basis[\[12\]](#_ftn12). Omitting programs was chosen as a conservative approach to resolving this issue, but this was accepted, as global optimal solutions were not expected for this experiment.

### Lego NXT Mindstorms Robot Reliability

The previously mentioned “compile-build-upload” process for installing compiled programs based on the generated programs failed several times, resulting in a Java exception being reported by the LeJOS firmware running on the Lego NXT Mindstorms robot.

This occurred during running tests and scenarios, requiring a new feature being added to the superoptimizer to run either tests, scenarios or both tests and scenarios: depending on whether the experiment failed during a test or scenario[\[13\]](#_ftn13).

The only intervention that was required was resetting the superoptimizer and the Lego NXT Mindstorms robot to resume the experiment after a failure occurred.

### Discontinuous Superoptimizer Operation

The generic, multi-objective superoptimizer was originally developed in mind that an experiment would be conducted in a single, continuous session. However the multiple issues encountered meant that the final output created by the superoptimizer did not contain the complete experiment results. This issue has no impact on the quality of the experiment or results: results were obtained by searching the log files generated for test and scenario results.

Results

-------

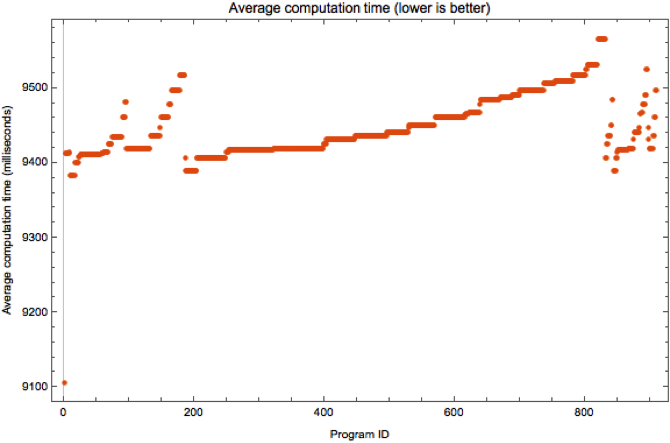

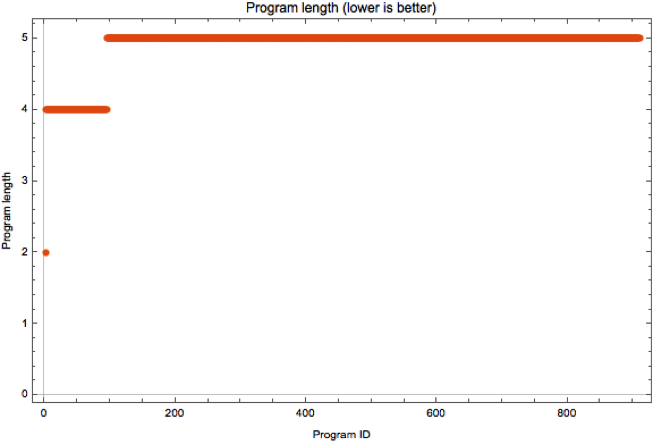

Excluding the time required to detect and resolve the aforementioned issues, the case study took 28 days to execute. Out of the 21,440,680 programs generated, 910 programs passed the tests on both the simulator and the Lego NXT Mindstorms robot. The following scatter plots show the overall distribution of results, organized by the scenario’s respective metrics.

Fig. 5. Average Computation Time versus Program ID Scatter Plot

Fig. 6. Program Length versus Program ID Scatter Plot

Fig. 7. Program Size versus Program ID Scatter Plot

The outlier program in Figure 5 and 6 is shown below.

Code Listing 4. Optimal program obtained from superoptimizer experiment

```

Motor.C.setPower( ( ( reading > checkValue ) ? DEFINED\_POWER : 2 ) );

```On average, the program in Code Listing 4 performed 300ms faster than other generated programs and was the shortest program, by program length.

When the program was substituted for the ported code on the Lego NXT Mindstorms robot in Code Listing 2[\[14\]](#_ftn14) the Lego NXT Mindstorms robot in its Tribot configuration was able to complete its manoeuvre as shown below.

\[gallery ids="290,286,291,293,288,287,289,292" type="rectangular"\]

Fig. 8. Lego NXT Mindstorms Robot successfully turning at 66% maximum speed

A video of this demonstration is available on the author’s Vimeo channel[\[15\]](#_ftn15).

6. Discussion

===============

Improve Lego NXT Mindstorms performance by reducing hardware instructions

-------------------------------------------------------------------------

An often-taught rule in embedded software development is to reduce the number of functions that require access to hardware (such as the functions on the Lego NXT Mindstorms robot that detect light observed by the light sensor or control the motor's speed). A direct correlation (P-value: 0.000355 and 4.76×10\-12) was observed between the number of times functions requiring the “C” motor and the light sensor were invoked and the average program execution time. Paradoxically, the number of times functions requiring the “B” motor was inversely correlated (P-value: 1.19×10\-47) to the average execution time. Unfortunately there was insufficient time to investigate this anomaly.

Tests should combine software emulation with hardware simulation

----------------------------------------------------------------

The process for equivalence testing used to resolve one of the experimental issues was inspired by Bansal and Aiken’s two-step equivalence test, where an initial execution test for “fingerprinting” is used to quickly scan the space of potential programs using a unit-testing methodology \[Bansal & Aiken 2006, p. 399\]. This process of testing the space of generated programs for equivalence in a synthetic environment and then using the physical system for detailed analysis greatly reduced the time to conduct experiments and did not reduce the quality of the results obtained, assuming Type 1 and Type 2 errors are sufficiently managed.

Machine independent superoptimizer potential

--------------------------------------------

The simple grammar defined in Appendix A generated programs between 2 and 5 lines of code: these programs are of similar length to the programs in Massalin’s paper. A limiting factor on the number of programs the superoptimizer can generate is the amount of memory available to the superoptimizer: this is because the superoptimizer stores all generated programs in memory. For instance, all 21,440,680 programs generated with the grammar required all 16 Gb memory available during the case study. Therefore, there is a direct correlation between the grammar complexity, superoptimizer memory and the program length. If an end-user had a large amount of memory available with a small grammar (such as those offered on microcontrollers with a reduced instruction set) then longer programs could be generated by the superoptimizer.

Superoptimization should increase learning about the experimentation environment rather than just find optimal programs

-----------------------------------------------------------------------------------------------------------------------

The author takes a different philosophical view to existing superoptimizer research: the focus should be on understanding why the “best” program was discovered, not just limited to finding the “best” program according to the end user’s metric. In particular, why using different statements in a programming language can perform the same function but improve a non-functional (such as code size or performance) metric.

The author believes this provides more value to developers, as one needs to manually check superoptimizer output to ensure correctness \[Granlund & Kenner 1992, p. 351\] as superoptimizers exploit “side-effects of the hardware architecture in unexpected ways” \[Hume 2013, p. 9\] and developers need to be aware of the impact of these side effects raised by Joshi et al \[Joshi et al. 2002, p. 2\]. For example, the superoptimizer functions could introduce security vulnerabilities through direct memory manipulation instead of using secure functions offered by the programming language. In addition, the end user needs to be aware that any optimization may be specific to their particular physical or technical environment and may not be generalizable to all contexts, especially where the metrics are sensitive to environment such as heat dissipation and energy consumption.

7. Future Research Directions

===============================

The generic, multi-objective superoptimizer can be improved in the following ways:

— Refactor the superoptimizer to include the two-step equivalence testing process.

— Given the superoptimizer was developed in Java using its parallelization framework the first, software-only equivalence test can be distributed using a distributed framework such as Apache Spark or Apache Hadoop. This could be deployed on a cloud-based provider such as Amazon Web Services to reduce the time required to search for programs.

— Increase the compatibility the ANTLR grammar parsing to include more ANTLR grammar constructs.

— Implement the more sophisticated program generation and testing methodologies from the literature review.

— Improve the output to be more resilient to failure such as exporting intermediate results if a failure during the experiment occurs and resuming experiments without significant user intervention.

— Perform the Pareto multi-objective optimization within the tool or export the results into more formats beyond comma-separated values or system logs.

Finally, more experimentation could be conducted on alternate processors, environments and programming languages measuring alternate metrics such as energy consumption or heat dissipation.

8\. Conclusion

================

The generic, multi-objective superoptimizer was successfully developed. The Lego NXT Mindstorms case study was conducted, successfully proving the hypothesis and finding that superoptimization can be used in a modern context. Further development of the superoptimizer is recommended to capitalize on contemporary improvements to the superoptimization processes. Continued research into alternate metrics such as energy consumption and heat dissipation is also recommended to discover new superoptimizer applications in contemporary embedded software development.

DISCLOSURE STATEMENT

====================

The author privately funded and resourced this research project. The author has no commercial relationship with any of the products or trademarks referenced in this article.

ACKNOWLEDGMENTS

===============

Trademarks mentioned in this article are the respective owners of Amazon, the Apache Software Foundation, Apple, GitHub, Lego, Microsoft, Oracle and Wolfram Research.

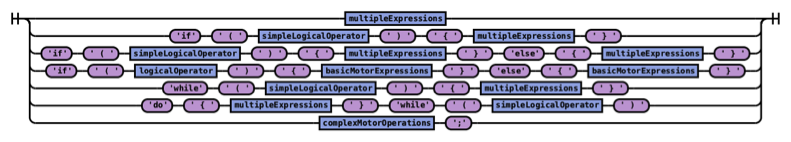

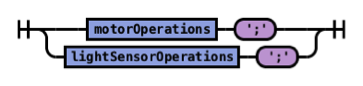

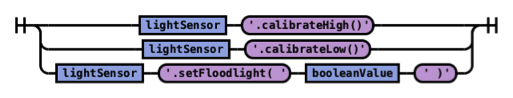

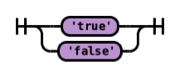

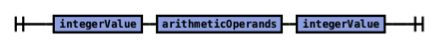

APPENDIX A – LeJOS Java Syntax

```

statement

multiple

Expressions

```

```

```

```

expression

```

```

```

```

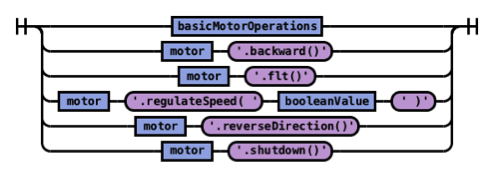

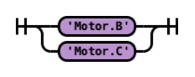

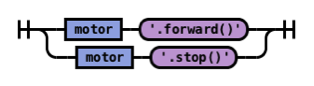

motor

Operations

basicMotor

Expressions

```

```

```

```

lightSensor

```

```

```

```

lightSensor

Functions

ReturningAn

Integer

lightSensor

Operations

```

```

```

```

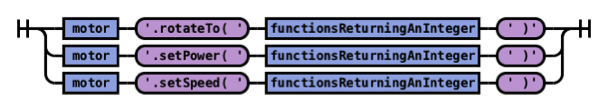

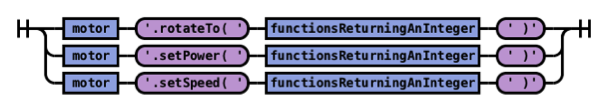

motor

```

```

```

```

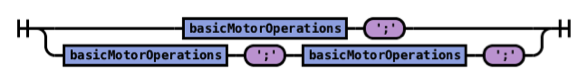

basicMotor

Operations

complexMotor

Operations

```

```

```

```

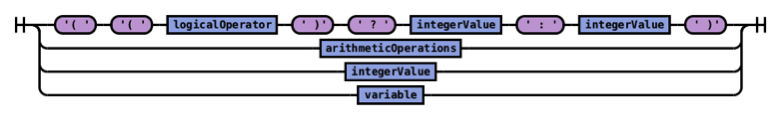

functionsReturningAnInteger

simpleLogical

Operator

```

```

```

```

logical

Operator

compoundArithmeticOperations

arithmetic

Operations

```

```

```

```

booleanValue

```

```

```

```

integerValue

```

```

```

```

variable

```

```

```

```

arithmetic

Operands

logical

Operands

REFERENCES

[1] Massalin, H. Superoptimizer: a look at the smallest program. SIGPLAN Notices, 22, 10 1987), 122-126.

[2] Joshi, R., Nelson, G. and Randall, K. Denali: a goal-directed superoptimizer. In Proceedings of the Proceedings of the ACM SIGPLAN 2002 Conference on Programming language design and implementation (Berlin, Germany, 2002). ACM, [insert City of Publication],[insert 2002 of Publication].

[3] Hume, T. Literature review: Superoptimizers. School of Information Sciences, University of Tampere, City, 2011.

[4] Granlund, T. and Kenner, R. Eliminating branches using a superoptimizer and the GNU C compiler. In Proceedings of the Proceedings of the ACM SIGPLAN 1992 conference on Programming language design and implementation (San Francisco, California, United States, 1992). ACM, [insert City of Publication],[insert 1992 of Publication].

[5] Joshi, R., Nelson, G. and Zhou, Y. Denali: A practical algorithm for generating optimal code. ACM Transactions on Programming Languages and Systems (TOPLAS), 28, 6 2006), 967-989.

[6] Bansal, S. and Aiken, A. Automatic generation of peephole superoptimizers. SIGPLAN Notices, 41, 11 2006), 394-403.

[7] Bansal, S. and Aiken, A. Binary Translation Using Peephole Superoptimizers. City, 2008.

[8] Brain, M., Crick, T., De Vos, M. and Fitch, J. TOAST: Applying Answer Set Programming to Superoptimisation. Springer Berlin Heidelberg, City, 2006.

[9] Sayle, R. A. A superoptimizer analysis of multiway branch code generation. City, 2008.

[10] Joseph, A. The Scenario Analyser and Test Executor: Applications of Superoptimising and Test-driven Development in Distributed Embedded Software Development. University of Technology, Sydney, 2009.

[11] Serpell, D. Superoptimizer for Microchip’s PIC microcontrollers. Google Pages, City, 2009.

[12] Warren, H. Hacker’s Delight. Henry Warren, City, 2009.

[13] Garg, A., Kumar, U. and Bansal, S. Superoptimization based LLVM to x86 Compiler2012).

[14] Juhász, D. and Kozsik, T. Superoptimization in LLVM. In Proceedings of the 9th Joint Conference on Mathematics and Computer Science (Siófok, Hungary, 9-12 February, 2012), [insert City of Publication],[insert 2012 of Publication].

[15] Auler, R. Superoptimization for LLVM IR. IC-UNICAMP Brazil, City, 2011.

[16] Regehr, J. Let’s Work on an LLVM Superoptimizer. Embedded in Academia, City, 2014.

[17] Schkufza, E., Sharma, R. and Aiken, A. Stochastic superoptimization. City, 2013.

[18] Hume, T. Is superoptimisation a viable technique for virtual machines? , University of Sussex, 2013.

[19] Joseph, A. Discovering nontrivial and functional behavior in register machines. Complex Systems, 22, 2 2013), 101-149.

[20] Crick, T., Brain, M., Vos, M. D. and Fitch, J. TOAST: Applying Answer Set Programming to Superoptimisation, 2006.

[21] Lego LEGO.com MINDSTORMS Meet The Robots Program. Lego, City, 2006.

[1] An example configuration file is available on GitHub, at https://github.com/ajosephau/generic_multiobjective_superoptimizer/blob/master/conf/properties/config_lejos_experiment_win.properties

[2] These grammar files are on GitHub, at https://github.com/antlr/grammars-v4.

[3] The ANTLR version 4 grammar lexer and parser specification are available on GitHub, at https://github.com/antlr/grammars-v4/tree/master/antlr4.

[4] The ANTLR version 4 railroad diagram builder program was used to generate the syntax diagrams, available on GitHub at https://github.com/bkiers/rrd-antlr4.

[5] The generic, multi-objective superoptimizer is available on the author’s GitHub site: https://github.com/ajosephau/generic_multiobjective_superoptimizer.

[6] The source code for this LeJOS rewrite is available as a GitHub Gist, at https://gist.github.com/ajosephau/35de84e202390a921c13. The companion “CommonNXTTasks.java” is required for compilation and is included for completeness.

[7] The video is available at: https://vimeo.com/125115173.

[8] The method for setting up the LeJOS framework on a 64-bit, Windows 8.1 operating system are available on the author’s personal blog: https://anthonythecoder.wordpress.com/2015/01/01/installing-lejos-for-mindstorms-nxt-on-windows-8/.

[9] The versions of the generic, multi-objective superoptimizer used in this experiment are captured as GitHub releases, available at: https://github.com/ajosephau/generic_multiobjective_superoptimizer/releases/tag/v0.1-alpha.

[10] A complete list of all programs developed is available at: https://github.com/ajosephau/generic_multiobjective_superoptimizer/releases/download/v0.1-alpha/list.of.all.programs.zip

[11] This superoptimizer version is captured in revision #3ffc91b at: https://github.com/ajosephau/generic_multiobjective_superoptimizer/commit/3ffc91baa623e45ea45b74621f385ee7cb5396dd.

[12]This superoptimizer version is captured in revision #4630d96 at: https://github.com/ajosephau/generic_multiobjective_superoptimizer/commit/4630d96c4249f2b2c0080e0a22997c3051fe9cb7.

[13] This superoptimizer version is captured in revision #6d58cd4 at: https://github.com/ajosephau/generic_multiobjective_superoptimizer/commit/6d58cd48635902a8d4797495e166581615d27425.

[14] The optimized program is included in the source code at: https://gist.github.com/ajosephau/ac97cb6841b1b5ae11fa.

[15] The video is available at: https://vimeo.com/125115174.

Comments:

#### Cristian Vasile -

Dude, The guys behind SPARK are working hard to optimize Spark SQL and other Pyhon libraries using LLVM and a Rust framework called WELD https://weld-project.github.io/ Your ideas and expertise could boost the speed of WELD’s generated machine code. Also Databricks provide free accounts on their Spark cluster, run your experiments on DB cloud for zero costs. Regards Cristian